08 February 2023

AirPixel used in live broadcast of Super Bowl LVII

Wide-area camera tracking solution enables ground-breaking Augmented Reality graphics during world's largest sports broadcast

AirPixel successfully tracked the in-stadium SkyCam at State Farm Stadium during Super Bowl LVII to deliver accurate position and orientation data as well as the camera’s focus, iris and zoom values. The integration of this data enabled flawless, dynamic matching of live imagery with virtual imagery throughout the show.

AirPixel is highly scalable and easy to deploy, making it ideal for temporary installations within stadiums. It is unaffected by lighting changes or weather conditions, ensuring reliable data is available at any point in the production.

The Idea

The use of Augmented Reality (AR) in live broadcasts is not new: stats and advertising are regularly displayed on top of live camera footage. However, with a strong desire to continuously improve the audience's viewing experience, Fox wanted to push the boundaries by integrating complex 3D virtual imagery aligned with the live footage from a moving camera. And what better place to start than with the Super Bowl!

The Challenge of Accurate Camera Tracking in Live Stadium Broadcasts

To match live imagery with virtual imagery, you need to know the exact position and orientation of the physical camera. Additionally, for the graphics to interact realistically with the camera’s focus, iris and zoom, you also need to capture and integrate these values.
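To illustrate why these values matter, the following minimal sketch projects a point on the field into the image using a simple pinhole camera model. It is a generic illustration, not the renderer used in the broadcast: the function name `project`, the convention that the camera looks along its own +z axis, and the example numbers are all assumptions.

```python
def project(point_world, cam_pos, cam_rot, focal_px, centre_px):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    cam_pos   -- camera position in world coordinates (metres)
    cam_rot   -- 3x3 world-to-camera rotation, given as a list of rows
    focal_px  -- focal length in pixels (this changes when the lens zooms)
    centre_px -- pixel coordinates of the image centre
    All names and conventions here are hypothetical.
    """
    # Vector from the camera to the point, still in world axes.
    d = [p - c for p, c in zip(point_world, cam_pos)]
    # Rotate into the camera frame (the camera looks along its +z axis).
    x, y, z = (sum(row[k] * d[k] for k in range(3)) for row in cam_rot)
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide, then scale by focal length and shift to the centre.
    return (centre_px[0] + focal_px * x / z,
            centre_px[1] + focal_px * y / z)


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# A virtual object anchored 10 m in front of the camera, 1 m to the side.
anchor = (1.0, 0.0, 10.0)
true_pixel = project(anchor, (0.0, 0.0, 0.0), IDENTITY, 1000.0, (960.0, 540.0))
# The same anchor rendered with a 10 cm error in the tracked camera position.
wrong_pixel = project(anchor, (0.1, 0.0, 0.0), IDENTITY, 1000.0, (960.0, 540.0))
print(true_pixel, wrong_pixel)
```

With these assumed numbers, a 10 cm position error moves the graphic by roughly 10 pixels, which would be visible as the virtual object sliding against the real pitch. An incorrect zoom value (`focal_px`) has a similar effect, which is why lens data has to be tracked alongside position and orientation.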

Obtaining the position and orientation of a camera is known as camera tracking. Inside a broadcast studio, cameras are often tracked with systems based on optical markers. However, in a large open volume such as a football stadium, where lighting conditions change, these systems are very hard to operate reliably.

If a stadium camera is in a fixed position, on an encoded pedestal or crane, tracking values are relatively easy to obtain. However, this only allows graphics to interact with imagery from a static camera position, and it does not capture camera values such as focus, iris and zoom.

Many stadium shots now take advantage of an aerial camera mounted on cables that flies above the field, such as a SkyCam. It is much harder to obtain the exact position and orientation of such a camera, because it moves at pace, in three dimensions, over a large area, and bounces and sways within its cable mounting. This unpredictable motion is not detected by encoders on cable systems.

Tracking the Untrackable: Solving Camera Motion in Large-Scale Sports Arenas

To track a SkyCam, or other freely moving cameras such as a Steadicam, Racelogic developed AirPixel, a system that combines Ultra-Wideband (UWB) radio signals with inertial sensors.

The principle of AirPixel is comparable to GPS, where a receiver calculates its position using signals from a number of satellites. In the case of AirPixel, the receiver is called a ‘rover’ and is mounted on the camera, while the equivalents of the satellites are called ‘beacons’ and are mounted around the stadium.
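As a rough illustration of this GPS-like principle, the sketch below estimates a rover position from measured distances to beacons at known locations. It uses generic textbook multilateration (a linearised least-squares solve) and is not a description of AirPixel's actual algorithm, which is not documented here. The function names, beacon coordinates and the assumption of noise-free range measurements are all hypothetical.

```python
import math


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate beacon geometry (e.g. coplanar beacons)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in reversed(range(3)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (m[r][3] - s) / m[r][r]
    return x


def multilaterate(beacons, ranges):
    """Estimate a 3D position from distances to four or more known beacons.

    Subtracting the first beacon's range equation from the others removes
    the quadratic term and leaves a linear system:
        2 (b_i - b_0) . p = |b_i|^2 - |b_0|^2 - r_i^2 + r_0^2
    which is solved in the least-squares sense via the normal equations.
    """
    if len(beacons) < 4 or len(beacons) != len(ranges):
        raise ValueError("need at least four beacons, one range per beacon")
    b0, r0 = beacons[0], ranges[0]
    n0 = sum(v * v for v in b0)
    rows, rhs = [], []
    for b, r in zip(beacons[1:], ranges[1:]):
        rows.append([2.0 * (b[k] - b0[k]) for k in range(3)])
        rhs.append(sum(v * v for v in b) - n0 - r * r + r0 * r0)
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    aty = [sum(row[i] * y for row, y in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, aty)


# Hypothetical beacon layout (metres) at varying heights around a field.
beacons = [(0.0, 0.0, 10.0), (100.0, 0.0, 30.0), (100.0, 60.0, 10.0),
           (0.0, 60.0, 30.0), (50.0, 30.0, 40.0)]
rover_truth = (40.0, 25.0, 15.0)
ranges = [math.dist(rover_truth, b) for b in beacons]  # ideal, noise-free ranges
print([round(v, 3) for v in multilaterate(beacons, ranges)])
```

Mounting the beacons at different heights matters in this sketch: if they all lie in one plane, the linear system cannot resolve the rover's position perpendicular to that plane.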

Because it does not rely on encoders or optical tracking, AirPixel is unaffected by changes in lighting or weather conditions. The combination of UWB and inertial sensors allows high-accuracy tracking of all camera motion across a wide area, ensuring accurate data throughout a whole stadium.
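Why combining the two sensor types helps can be shown with a simplified one-dimensional sketch. Inertial data arrives at a high rate but drifts when integrated; radio position fixes arrive less often but do not drift. The code below is a generic fixed-gain filter written for illustration only. It does not represent Racelogic's fusion method, and the update rates, gains and accelerometer bias are assumed values.

```python
def simulate(duration_s=10.0, dt=0.01, fix_every=10,
             accel_bias=0.05, true_pos=5.0, k_pos=0.2, k_vel=1.0):
    """Compare pure inertial integration with radio-corrected integration.

    The camera is stationary at true_pos (metres). The accelerometer reports
    a small constant bias instead of zero. Every `fix_every` inertial samples
    a position fix arrives and nudges the fused estimate back.
    All parameter values are hypothetical.
    """
    steps = int(round(duration_s / dt))
    dr_pos, dr_vel = true_pos, 0.0   # dead reckoning: inertial only
    fu_pos, fu_vel = true_pos, 0.0   # fused: inertial plus position fixes
    for i in range(steps):
        accel = accel_bias           # true acceleration is zero
        # Inertial prediction step, applied to both estimates.
        dr_pos += dr_vel * dt + 0.5 * accel * dt * dt
        dr_vel += accel * dt
        fu_pos += fu_vel * dt + 0.5 * accel * dt * dt
        fu_vel += accel * dt
        # Correction step whenever a position fix is available.
        if (i + 1) % fix_every == 0:
            residual = true_pos - fu_pos   # ideal, noise-free fix
            fu_pos += k_pos * residual
            fu_vel += k_vel * residual
    return dr_pos, fu_pos


dead_reckoned, fused = simulate()
print(f"inertial only: {dead_reckoned:.3f} m, fused: {fused:.3f} m")
```

In this sketch the inertial-only estimate wanders metres away from the true position within ten seconds, while the corrected estimate stays within a few centimetres. Production systems typically use more sophisticated estimators, such as a Kalman filter, but the division of labour between the sensors is the same.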

Additionally, AirPixel integrates directly with the camera to capture its focus, iris and zoom values, so that graphics can respond to changes made within the camera as well as to its physical movement.

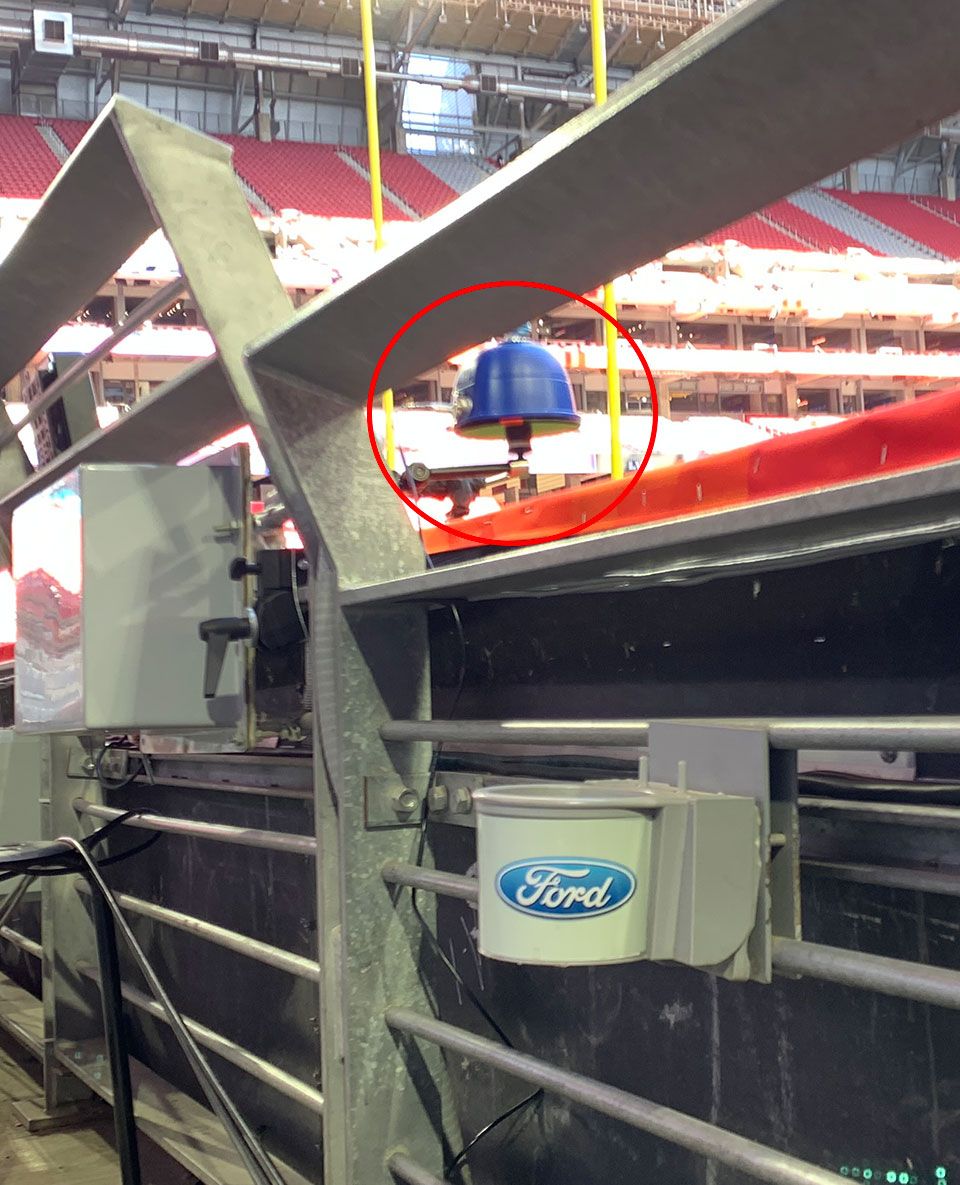

AirPixel beacons placed around the stadium

Skycam with AirPixel rover installed

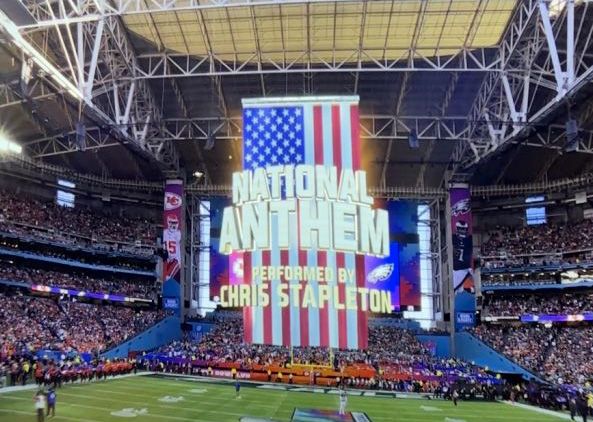

Graphics introducing the singing of the national anthem.

Installing AirPixel on Skycam

Racelogic worked closely with Fox, Sony, Skycam and Silver Spoon Animation to integrate AirPixel into the existing workflow. In the build-up to the 2023 Super Bowl, the teams worked together to develop and test the system in various stadiums in the US.

In one of the most challenging broadcast environments, AirPixel provided highly accurate, reliable data that enabled groundbreaking graphics for the audience at home. These included player line-ups displayed in the end zones, virtual scoreboards, and team and broadcaster logos.

The graphics also enhanced the entertainment elements, including introducing the singing of the national anthem (pictured).

AirPixel

Silverspoon Animation

Sony

Fox Sports

Skycam